
Wikimedia servers

Wikipedia and the other Wikimedia projects are run from several racks full of servers. See also Wikimedia’s technical blog and phabricator.

Contents

1 System architecture

1.1 Network topology

1.2 Software

2 Hosting

2.1 History

3 Status and monitoring

4 Energy use

5 See also

5.1 More hardware info

5.2 Admin logs

5.3 Offsite traffic pages

5.4 Long-term planning

6 Historical information

7 Information about other web sites

8 References

System architecture

Network topology

The network topology is described in "Network design" at Wikitech.

Software

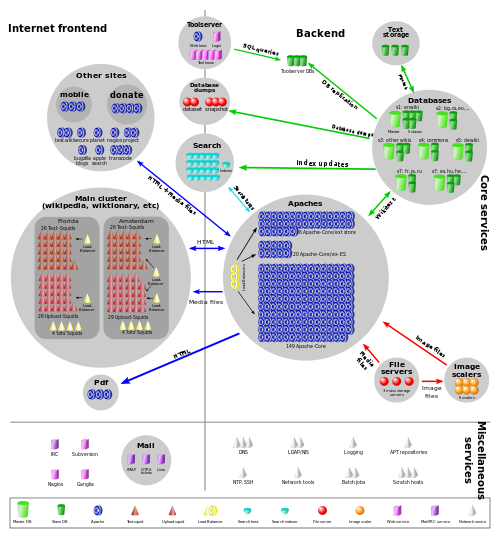

Simplified overview of the employed software as of October 2015. (A very complex LAMP "stack")

- Our DNS servers run gdnsd. We use geographical DNS to distribute requests between our four data centers (3x US, 1x Europe) depending on the location of the client.

- We use Linux Virtual Server (LVS) on commodity servers to load balance incoming requests. LVS is also used as an internal load balancer to distribute MediaWiki requests. For back end monitoring and failover, we have our own system called PyBal.

- For regular MediaWiki web requests (articles/API) we use Varnish caching proxy servers in front of Apache HTTP Server.

- All our servers run either Debian or Ubuntu Server.

- For distributed object storage we use Swift.

- Our main web application is MediaWiki, which is written in PHP (~70 %) and JavaScript (~30 %).[1]

- Our structured data has been stored in MariaDB since 2013.[2] We group wikis into clusters, and each cluster is served by several MariaDB servers, replicated in a single-master configuration.

- We use Memcached for caching of database query and computation results.

- For full-text search we use Elasticsearch (Extension:CirrusSearch).
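The database and caching bullets above can be pictured with a small sketch: wikis are assigned to clusters, each cluster has one master that takes all writes and several replicas that share the reads, and a cache sits in front of read queries. This is only an illustration under stated assumptions, not how MediaWiki implements it. Every name here (`Cluster`, `CachedReader`, `WIKI_TO_CLUSTER`, the host names and the wiki-to-cluster assignment) is hypothetical, and a plain dict stands in for Memcached.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """One database cluster: a single master plus read replicas (hypothetical)."""
    master: str
    replicas: list
    _cycle: object = field(init=False, repr=False)

    def __post_init__(self):
        # Round-robin over the replicas; fall back to the master if there are none.
        self._cycle = itertools.cycle(self.replicas or [self.master])

    def host_for(self, is_write: bool) -> str:
        # Single-master replication: every write goes to the master,
        # reads are spread across the replicas.
        return self.master if is_write else next(self._cycle)


# Hypothetical host names and wiki-to-cluster assignment, for illustration only.
CLUSTERS = {
    "c1": Cluster("db-c1-master", ["db-c1-replica1", "db-c1-replica2"]),
    "c2": Cluster("db-c2-master", ["db-c2-replica1"]),
}
WIKI_TO_CLUSTER = {"enwiki": "c1", "dewiki": "c2", "frwiki": "c2"}


def pick_host(wiki: str, is_write: bool) -> str:
    """Return the database host that should serve this request."""
    return CLUSTERS[WIKI_TO_CLUSTER[wiki]].host_for(is_write)


class CachedReader:
    """Cache-aside reads; a plain dict stands in for Memcached."""

    def __init__(self, run_query):
        self.cache = {}
        self.run_query = run_query  # callable(host, wiki, query) -> result

    def read(self, wiki: str, query: str):
        key = (wiki, query)
        if key in self.cache:
            return self.cache[key]  # cache hit: no database work
        host = pick_host(wiki, is_write=False)
        result = self.run_query(host, wiki, query)
        self.cache[key] = result  # store the result for later requests
        return result
```

With a stub `run_query`, a repeated `read` for the same wiki and query reaches a replica only once; the second call is answered from the dict.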

https://noc.wikimedia.org/ – Wikimedia configuration files.

Overview of system architecture

Wikimedia server racks at CyrusOne

Hosting


As of May 2018, we have the following colocation facilities (each name is derived from an acronym of the facility’s company and an acronym of a nearby airport):

- eqiad

- Application services (primary) at Equinix in Ashburn, Virginia (Washington, DC area).

- codfw

- Application services (secondary) at CyrusOne in Carrollton, Texas (Dallas-Fort Worth area).

- esams

- Caching at EvoSwitch in Amsterdam, the Netherlands.

- ulsfo

- Caching at United Layer in San Francisco.

- eqsin

- Caching at Equinix in Singapore.
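The naming convention for the facilities above can be sketched as a simple split. The sketch assumes that the last three letters of each site code are the airport part and that whatever precedes them is the company part. Reading those three letters as the IATA codes IAD, DFW, AMS, SFO and SIN is an interpretation, not something this page states, and the helper `split_site_code` is hypothetical.

```python
from typing import Tuple

# Site codes and companies as listed on this page.
SITES = {
    "eqiad": "Equinix",
    "codfw": "CyrusOne",
    "esams": "EvoSwitch",
    "ulsfo": "United Layer",
    "eqsin": "Equinix",
}


def split_site_code(code: str) -> Tuple[str, str]:
    """Split a site code into (company prefix, airport code).

    Assumes the final three letters are the airport part.
    """
    if len(code) < 4 or not code.isalpha():
        raise ValueError(f"unexpected site code: {code!r}")
    return code[:-3], code[-3:].upper()


for code, company in SITES.items():
    prefix, airport = split_site_code(code)
    print(f"{code}: '{prefix}' for {company}, airport code {airport}")
```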

History

The backend web and database servers are in Ashburn, with Carrollton to handle emergency fallback in the future. Carrollton was chosen for this as a result of the 2013 Datacenter RfC. At EvoSwitch, we have a Varnish cache cluster and several miscellaneous servers. The Kennisnet location is now used only for network access and routing.

Ashburn (eqiad) became the primary data center in January 2013, taking over from Tampa (pmtpa and sdtpa), which had been the main data center since 2004. Around April 2014, sdtpa (Equinix, formerly Switch and Data, in Tampa, Florida, which provided networking for pmtpa) was shut down, followed by pmtpa (Hostway, formerly PowerMedium, in Tampa, Florida) in October 2014.

In the past we've had other caching locations like Seoul (yaseo, Yahoo!) and Paris (lopar, Lost Oasis); the WMF 2010–2015 strategic plan reach target states: "additional caching centers in key locations to manage increased traffic from Latin America, Asia and the Middle East, as well as to ensure reasonable and consistent load times no matter where a reader is located."

EvoSwitch and Kennisnet are recognised as benefactors for their in-kind donations. See the current list of benefactors.

A list of servers and their functions used to be available at the server roles page; no such list is currently maintained publicly (the private racktables tool may have one). It used to be possible to see a compact table of all servers grouped by type on Icinga, but this is no longer publicly available. The puppet configuration, however, is a fairly good reference for the software that each server runs.

Status and monitoring

You can check one of the following sites if you want to know if the Wikimedia servers are overloaded, or if you just want to see how they are doing.

- Grafana: Data center overview with total bandwidth, non-idle CPU and load per group of servers; versions also exist with a per-subcluster dropdown and total numbers without graphs

- Icinga (private)

- Networking latency

If you are seeing errors in real time, visit #wikimedia-tech on irc.freenode.net. Check the topic to see if someone is already looking into the problem you are having. If not, please report your problem to the channel. It would be helpful if you could report specific symptoms, including the exact text of any error messages, what you were doing right before the error, and what server(s) are generating the error, if you can tell.

Energy use

The Sustainability Initiative aims at reducing the environmental impact of the servers by calling for renewable energy to power them.

The Wikimedia Foundation's servers are spread out in four colocation data centers in Virginia, Texas and San Francisco in the United States, and Amsterdam in Europe. As of May 2016, the servers use 222 kW, summing up to about 2 GWh of electrical energy per year. For comparison: An average household in the United States uses 11 MWh/year, the average for Germany is 3 MWh/year.
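The conversion from average power to annual energy in the paragraph above can be checked with a few lines. This is a rough sketch: the 222 kW, 11 MWh/year and 3 MWh/year figures come from the text, the hours-per-year constant is the one used in the carbon footprint table on this page, and the function names are illustrative only.

```python
HOURS_PER_YEAR = 8765.76  # 365.24 days * 24 hours


def annual_energy_gwh(power_kw: float) -> float:
    """Energy used in one year, in GWh, at a constant average power in kW."""
    return power_kw * HOURS_PER_YEAR / 1e6  # kWh -> GWh


def household_equivalents(power_kw: float, household_mwh_per_year: float) -> float:
    """Number of average households that use the same energy per year."""
    annual_mwh = power_kw * HOURS_PER_YEAR / 1e3  # kWh -> MWh
    return annual_mwh / household_mwh_per_year


print(f"{annual_energy_gwh(222):.2f} GWh per year")
print(f"{household_equivalents(222, 11):.0f} average US households")
print(f"{household_equivalents(222, 3):.0f} average German households")
```

Under these figures, 222 kW works out to a little under 2 GWh per year, which matches the "about 2 GWh" stated above.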

Only the few servers in Amsterdam run on renewable energy; the others use various conventional energy mixes. Overall, just 9% of the energy used by the Wikimedia Foundation's data centers comes from renewable sources, with the rest split roughly evenly between coal, gas and nuclear power (34%, 28%, and 28%, respectively). The bulk of the Wikimedia movement's electricity demand is in Virginia and Texas, both of which unfortunately have grids that rely heavily on fossil fuels.

| Data center | Location | Provider | Date opened | Average power (kW) | Energy sources | Carbon footprint (CO2/year) | Renewable option |
|---|---|---|---|---|---|---|---|
| eqiad | Ashburn, VA 20146-20149 | Equinix | February 2011 | May 2016: 130; May 2015: 152 | 32% coal, 20% natural gas, 25% nuclear, 17% renewable | 1,040,000 lb = 520 short tons = 470 metric tons = 0.32 * 130 kW * 8765.76 hr/yr * 2.1 lb CO2/kWh for coal + 0.20 * 130 kW * 8765.76 hr/yr * 1.22 lb CO2/kWh for natural gas + 0.25 * 130 kW * 8765.76 hr/yr * 0 lb CO2/kWh for nuclear + 0.17 * 130 kW * 8765.76 hr/yr * 0 lb CO2/kWh for renewable | In 2015, Equinix made "a long-term commitment to use 100 percent clean and renewable energy". In 2017, Equinix renewed this pledge. |
| codfw | Carrollton, TX 75007 | CyrusOne | May 2014 | May 2016: 77; May 2015: 70 | 23% coal, 56% natural gas, 6% nuclear, 1% hydro/biomass/solar/other, 14% wind (Oncor/Ercot) | 790,000 lb = 400 short tons = 360 metric tons = 0.23 * 77 kW * 8765.76 hr/yr * 2.1 lb CO2/kWh for coal + 0.56 * 77 kW * 8765.76 hr/yr * 1.22 lb CO2/kWh for natural gas + 0.06 * 77 kW * 8765.76 hr/yr * 0 lb CO2/kWh for nuclear + 0.15 * 77 kW * 8765.76 hr/yr * 0 lb CO2/kWh for renewables | ? |
| esams | Haarlem 2031 BE | EvoSwitch | December 2008 | May 2016: < 10; May 2015: 10 | "a combination of wind power, hydro and biomass" | 0 | n.a. |
| ulsfo | San Francisco, CA 94124 | UnitedLayer | June 2012 | May 2016: < 5; May 2015: < 5 | 25% natural gas, 23% nuclear, 30% renewable, 6% hydro, 17% unspecified (PG&E) | 13,000 lb = 6.7 short tons = 6.1 metric tons (+ unspecified) = 0.00 * 5 kW * 8765.76 hr/yr * 2.1 lb CO2/kWh for coal + 0.25 * 5 kW * 8765.76 hr/yr * 1.22 lb CO2/kWh for natural gas + 0.23 * 5 kW * 8765.76 hr/yr * 0 lb CO2/kWh for nuclear + 0.36 * 5 kW * 8765.76 hr/yr * 0 lb CO2/kWh for hydro/renewable + 0.17 * 5 kW * 8765.76 hr/yr * ? lb CO2/kWh for unspecified | ? |
| eqsin | Singapore | Equinix | ? | ? | ? | ? | ? |
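The carbon footprint column in the table above follows one formula per energy source: share of the mix, times average power, times hours per year, times an emission factor. A minimal sketch of that arithmetic follows. The emission factors (2.1 lb CO2/kWh for coal, 1.22 for natural gas, 0 for nuclear and renewables), the shares and the power figures are taken from the table. The function and dictionary names are illustrative, and the "unspecified" share for ulsfo is left out because the table gives no factor for it.

```python
HOURS_PER_YEAR = 8765.76

# Emission factors in lb CO2 per kWh, as used in the table.
LB_CO2_PER_KWH = {
    "coal": 2.1,
    "natural_gas": 1.22,
    "nuclear": 0.0,
    "renewable": 0.0,
}

LB_PER_SHORT_TON = 2000.0
LB_PER_METRIC_TON = 2204.62


def annual_co2_lb(power_kw: float, mix: dict) -> float:
    """Annual CO2 in pounds for a site with the given average power and energy mix."""
    return sum(
        share * power_kw * HOURS_PER_YEAR * LB_CO2_PER_KWH[source]
        for source, share in mix.items()
    )


# (average power in kW for May 2016, energy mix) per site, from the table.
SITES = {
    "eqiad": (130, {"coal": 0.32, "natural_gas": 0.20, "nuclear": 0.25, "renewable": 0.17}),
    "codfw": (77, {"coal": 0.23, "natural_gas": 0.56, "nuclear": 0.06, "renewable": 0.15}),
    "ulsfo": (5, {"natural_gas": 0.25, "nuclear": 0.23, "renewable": 0.36}),
}

for site, (power_kw, mix) in SITES.items():
    lb = annual_co2_lb(power_kw, mix)
    print(
        f"{site}: {lb:,.0f} lb = {lb / LB_PER_SHORT_TON:,.1f} short tons"
        f" = {lb / LB_PER_METRIC_TON:,.1f} metric tons"
    )
```

The unrounded results are slightly above the rounded figures shown in the table (for example, about 1,044,000 lb for eqiad against the table's 1,040,000 lb).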

See also

More hardware info

- Technical FAQ – How about the hardware?

- Your donations at work: new servers for Wikipedia, by Brion Vibber, 02-12-2009

- wikitech:Clusters – technical and usually more up-to-date information on the Wikimedia clusters

Admin logs

- Server admin log – Documents server changes (especially software changes)

Offsite traffic pages

- Alexa traffic rank

Long-term planning

- wikitech-l mailing list

- wikitech.wikimedia.org

Historical information

- Cache strategy (draft from 2006)

- PHP caching and optimization (draft from 2007)

- Hardware orders up to 2007

Information about other web sites

LiveJournal (livejournal.com):

- 04/2004 MySQLCon 2004 PDF/SXI

- 07/2004 OSCON 2004 PDF/SXI

- 11/2004 LISA 2004 PDF/SXI

- 04/2005 MySQLCon 2005 PDF/PPT/SXI

- Blogs to follow for system details: Brad Fitzpatrick, lj_backend, and lj_maintenance.

Google Search (google.com):

- Google cluster architecture (PDF)

References

[1] See MediaWiki analysis and MediaWiki WMF-supported extensions analysis.

[2] "Wikipedia Adopts MariaDB". Wikimedia blog, blog.wikimedia.org. Wikimedia Foundation, Inc. 2013-04-22. Retrieved 2014-07-20.

Categories:

- Wikimedia hardware

- Wikimedia servers administration
